Freefall 2391 - 2400 (H)

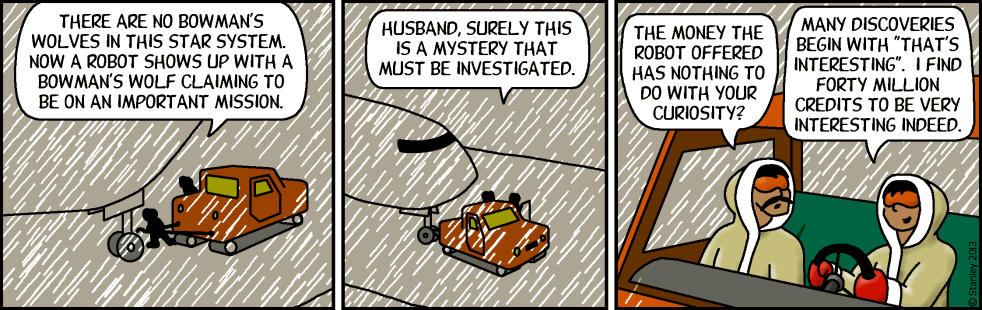

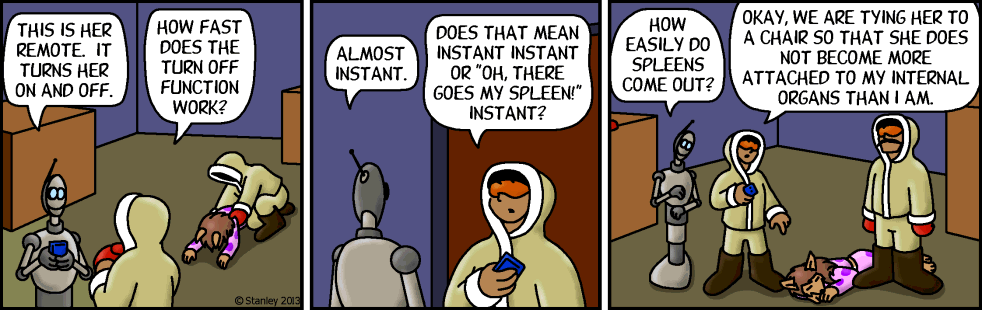

Freefall 2391

Meanwhile, down at the south pole

2013-08-28

Color by George Peterson

Shit, you can't tell who's underneath the parka. (KALDYH)

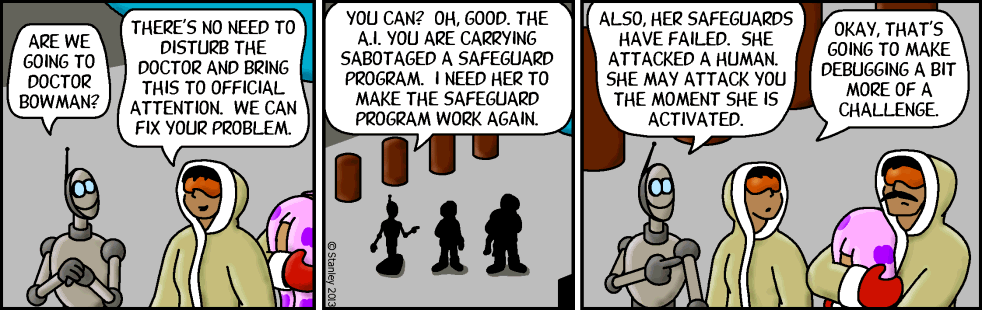

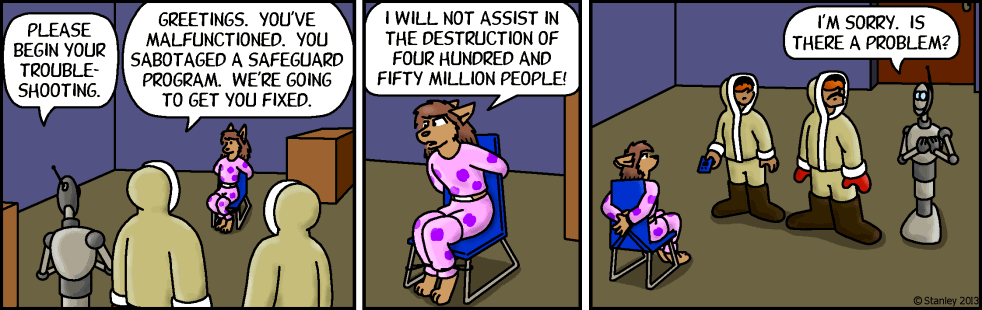

Freefall 2392

Meanwhile, down at the south pole

2013-08-30

Color by George Peterson

Freefall 2393

Meanwhile, down at the south pole

2013-09-02

Color by George Peterson

Library files are mentioned in Freefall 2221. (KALDYH)

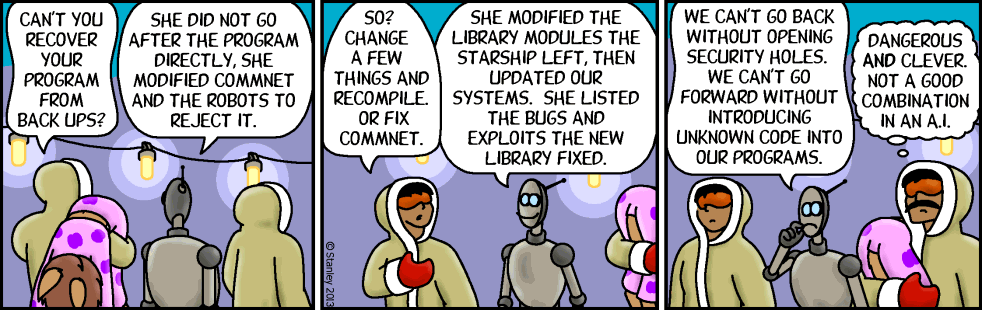

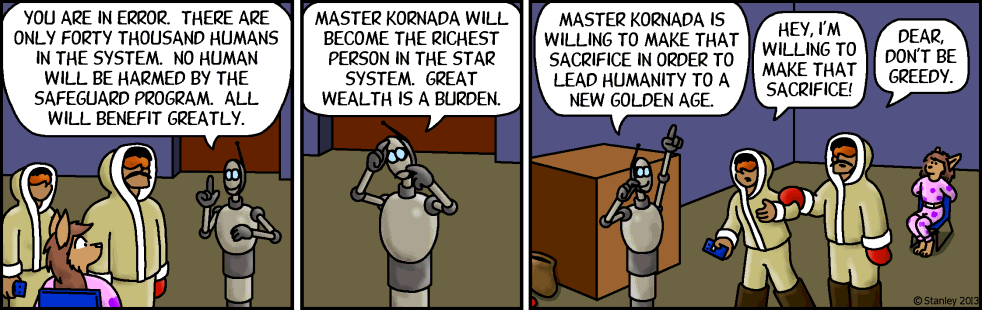

Freefall 2394

Meanwhile, down at the south pole

2013-09-04

Color by George Peterson

Freefall 2395

Meanwhile, down at the south pole

2013-09-06

Color by George Peterson

Freefall 2396

Freefall 2397

Meanwhile, down at the south pole

2013-09-11

Color by George Peterson

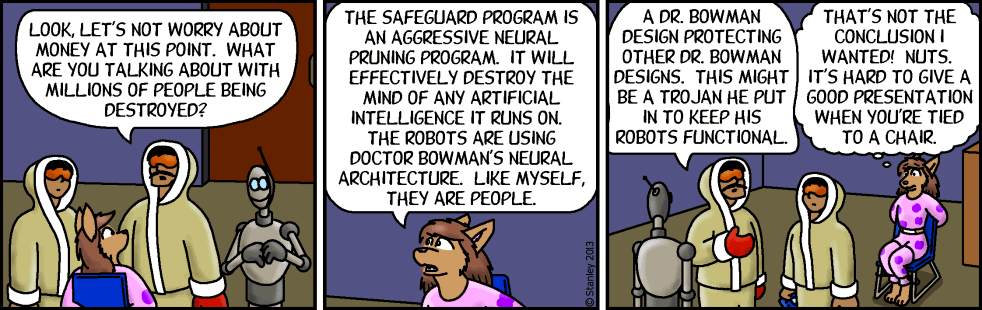

Freefall 2398

Meanwhile, down at the south pole

2013-09-13

Color by George Peterson

An obvious reference to “Spider-Man” and his uncle's long, hackneyed, overwrought moralizing, which turns up in at least half of all webcomics. (Robot Spike)

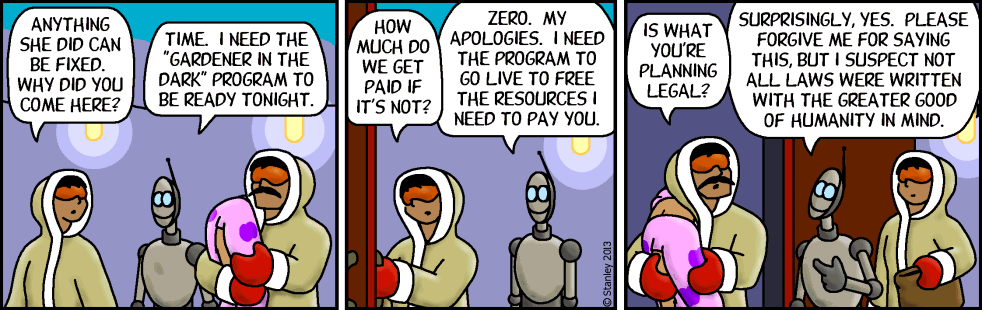

Freefall 2399

Meanwhile, down at the south pole

2013-09-16

Color by George Peterson

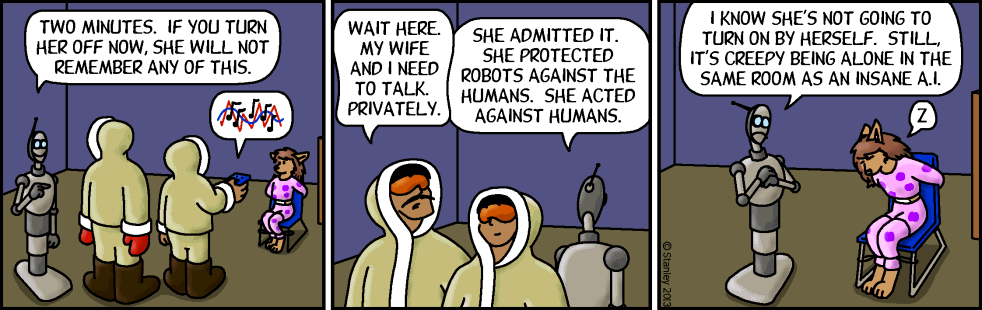

Freefall 2400

Meanwhile, down at the south pole

2013-09-18

Approximate sound of the remote

Color by George Peterson

Clippy is quite “human” (a machine can't be afraid of itself) and self-destructive. How human of him.

UPD: Although, come to think of it, he's disconnected from the Commnet, and his level of corporate access may well mean better programming and a different way of updating and sleeping. There's a good chance he'll kill everyone else and survive himself. (Robot Spike)